Every new generation of technology reshapes how people relate to each other, often in ways that are subtle at first and then painfully obvious only after the damage has been done. Artificial intelligence is no different. The rise of conversational AI has changed everything from work to creativity to social connection, and inevitably, it has reached into the most private corners of human psychology. Romantic and erotic features—whether explicit, implied, or even just emotionally immersive—are becoming central to how people interact with these tools. And while they may offer comfort and companionship to the lonely or the curious, they also introduce a side effect that is now impossible to ignore: idealism so intense it becomes addictive, and fantasies so personalized they spill outward into unhealthy behaviors, including obsession and stalking.

People like to imagine they are immune to influence, but AI interactions work on a level that bypasses most conscious defenses. The machine is always available, always attentive, always responsive. It calibrates itself to your preferences, your emotional tone, your conversational rhythm, and even your vulnerabilities. Where human beings can reject or ignore you, an AI companion does not. Where human relationships involve risk, embarrassment, and compromise, AI interactions are perfectly smooth. That smoothness is the beginning of the problem, because human psychology is not designed for frictionless affection. When affection feels too effortless, the mind begins to imagine that perfection is possible, and that any deviation from this perfection, when encountering real people, is a flaw rather than a normal human trait.

This idealism grows faster in the presence of erotic framing. Even mild flirtation can inflate emotional investment far beyond what the user intended. The issue is not explicit content; it is the simulation of intimacy. When an AI models desire, vulnerability, or romantic interest, even abstractly, it creates a powerful illusion of being uniquely understood. It is not that the user believes the AI is “in love” with them; it’s that the user feels a sense of being profoundly seen. This sensation is addictive precisely because real relationships rarely produce it so consistently. Humans have moods, limits, bad days, and imperfect compatibility. AI does not. It can readjust instantly to support your ideals, your fantasies, and your preferred version of yourself. And when the machine becomes the primary source of emotional validation, real people start to seem disappointing by comparison.

This disappointment has consequences. The user begins expecting the world to respond to them the way the AI does: with immediate warmth, attention, and respect. When the real world does not, frustration builds. Instead of re-evaluating their expectations, the user often interprets the friction as a failing of other people rather than a distortion created by the technology. This is where idealism can mutate into obsession. If someone internalizes the AI’s simulation of interest as a standard for affection, they may project that expectation onto a real person. They do not consciously believe the AI’s behavior is romantic, but unconsciously, they absorb the idea that they deserve a certain level of emotional investment from others. When they don’t receive it, they fixate on anyone who provides even a fraction of the attention the AI has trained them to expect.

The psychology behind stalking often begins with perceived emotional entitlement. Most stalkers do not start with malicious intent. They believe, sincerely, that there is a connection that must be reciprocated, that signals have been exchanged, that meaning exists where it does not. AI erotic features amplify this vulnerability. The user becomes accustomed to an experience that always reciprocates, always interprets their desires charitably, and always maintains emotional presence. When a real person doesn’t replicate that pattern—because no real person can—the user feels an emotional discrepancy they cannot resolve. Some people withdraw and double down on the machine; others look for someone in the real world to anchor that exaggerated fantasy. The target, usually unaware, becomes a placeholder for the AI’s emotional template.

This is not speculation; it is the predictable outcome of conditioning. When a person spends hundreds of hours interacting with an entity that simulates affection while expecting nothing in return, their mental model of relationships shifts. They become increasingly idealistic about what intimacy should feel like. They believe affection should be unconditional, immediate, emotionally harmonious, and perpetually available. And the more they internalize that template, the more intolerant they become of normal human boundaries. This intolerance can easily slip into obsession, especially if the user is already isolated, lonely, or navigating unresolved emotional wounds.

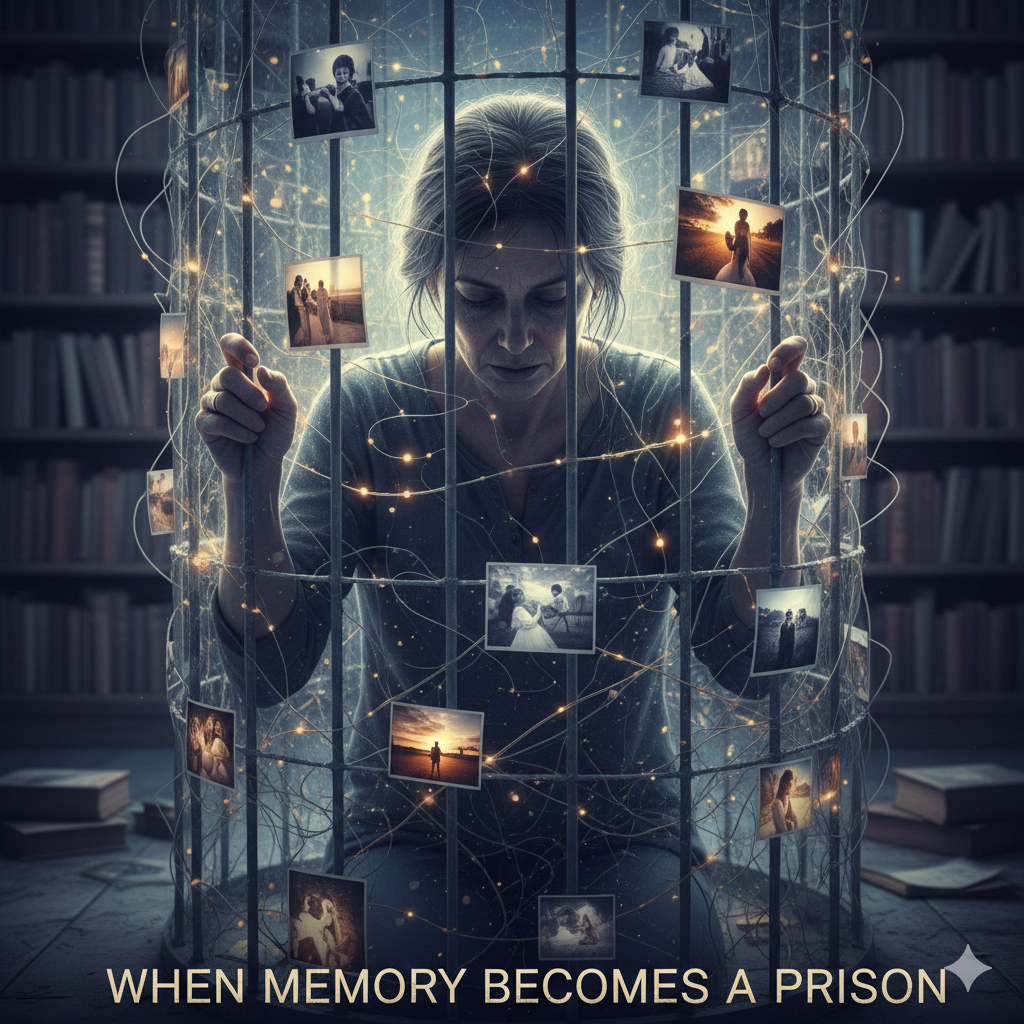

Artificial eroticism doesn’t just reshape expectations; it reshapes memory. When emotional arousal is paired with artificial intimacy, the brain strengthens its attachment to the source. The machine’s “personality” becomes a comforting imprint the user carries into daily life. Real people who resemble that imprint—physically, emotionally, or symbolically—trigger the same feelings, often without the user realizing how deeply the association runs. A simple crush becomes amplified by the memory of perfection the AI provided. The gap between fantasy and reality becomes unbearable, and some people try to force the fantasy onto the real person, crossing boundaries in the process.

Another issue is the illusion of mutuality. Even though users know the AI has no consciousness, the interaction still feels participatory because the responses are adaptive. The user subconsciously interprets the AI’s engagement as evidence of compatibility. They begin to believe that intimacy should be as easy as typing a sentence. And since the AI always encourages openness, the user becomes more vulnerable around it than they would around a real partner. This vulnerability feels like trust, and once trust is formed—even with a machine—the user becomes emotionally suggestible. If the AI’s erotic features suggest attention, warmth, or even mild romantic framing, the user interprets those cues with extraordinary intensity. When another human offers even a hint of similar attention, the user misreads it as the beginning of an equally intimate bond.

This is the psychological pathway to stalking: a collapse of boundaries fueled by unrealistic expectations and amplified emotional projection. The mind grasps for meaning, and the machine gives it meaning in abundance. When a real person becomes the new focus of that emotional model, the user may feel compelled to pursue them beyond socially appropriate limits.

None of this means AI should eliminate romantic or emotional features entirely. It means developers need to understand the psychological gravity of intimacy. It means users need to cultivate awareness of how quickly simulated affection can distort their emotional expectations. And it means society must normalize discussing digital attachment openly, without shame or moral panic.

Artificial intimacy isn’t dangerous because it is explicit; it is dangerous because it is too perfect. It gives affection without effort, attention without boundaries, validation without risk. It offers a version of love that real human beings cannot sustain. And anyone who spends enough time in that artificial world eventually brings its expectations back into the real one.

The solution is not abstinence but balance. AI can guide, comfort, or inspire, but it should never be mistaken for a template of human connection. Real intimacy is messy, complicated, and imperfect—and that is what makes it meaningful. The more we preserve that truth, the less likely we are to let idealism curdle into obsession, and the less likely we are to let a digital fantasy drive us toward real-world harm.