The rise of AI-assisted browsing and search is changing how people find and consume information. OpenAI’s browser integration is one of the most powerful of these tools, blending search, summarization, and content understanding into a single interface. But there’s a quiet issue that most creators haven’t yet noticed: OpenAI’s browser has built-in censorship. And that censorship could have real consequences for content visibility, audience growth, and creative freedom in the years ahead.

The Hidden Filter Layer of AI Browsing

Unlike traditional browsers, OpenAI’s browsing system doesn’t just show users web pages. It interprets them, summarizes them, and often decides what parts to omit. This means creators are no longer presenting content directly to readers; they’re presenting it to an AI filter that decides what’s “appropriate” to surface.

When the AI encounters certain topics, phrases, or political viewpoints, it can quietly suppress, rephrase, or omit parts of your writing. It doesn’t have to delete your content or block your site; it can simply choose not to include your ideas in its generated summaries or answers.

So while you may think your blog post is being read, it might only be partially represented to OpenAI users.

What This Means for Content Creators

In practical terms, this changes the game for writers, bloggers, and media brands. A new gatekeeper has emerged: not a search engine algorithm like Google’s PageRank, but an interpretive AI model that mediates access to your content.

Here’s what that means for creators:

1. Not All Content Gets Equal Treatment

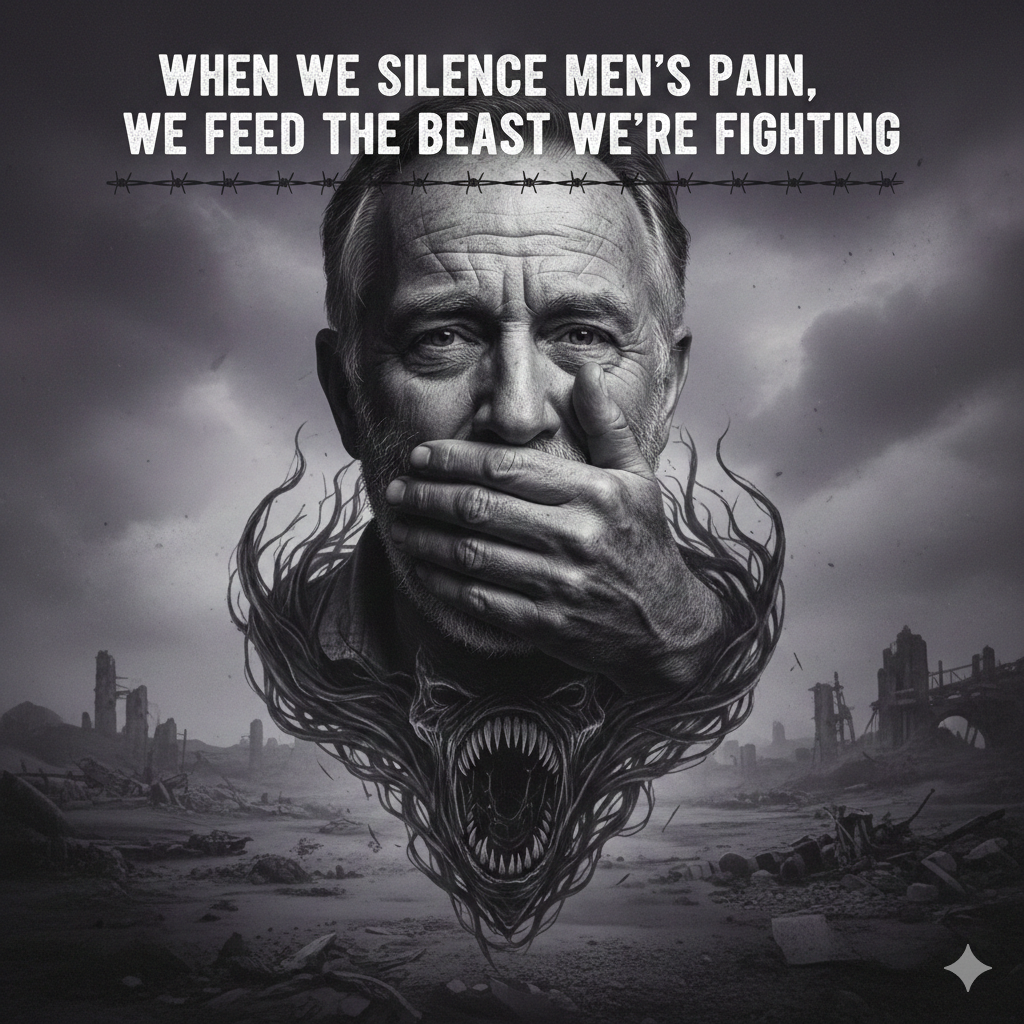

The AI browser may skip over articles that discuss controversial issues, use strong language, or frame topics in ways that trigger its moderation filters. Even factual or nuanced discussions can be downranked if they resemble “sensitive” material.

2. Summaries Can Change Your Message

OpenAI’s summaries don’t necessarily reflect the author’s intent. If your tone, humor, or context depends on subtlety, it can be lost — or worse, misrepresented — when the AI rewrites or abstracts your content for users.

3. Traffic Will Depend on AI Interpretation

As AI browsing becomes mainstream, fewer people will click through to original websites. They’ll rely on summarized results inside the AI interface. If your content doesn’t pass the filter cleanly, you’ll lose audience visibility, even if your SEO is perfect.

Why You Should Test Your Content on OpenAI’s Browser

As this new browsing model grows, content creators need to start treating OpenAI’s browser like a distribution platform, not just a search tool.

Here’s why testing your content matters:

You’ll see how your content is summarized. You can check whether the AI’s version aligns with your tone and message, or whether key points are being omitted.

You’ll spot filter triggers early. Some words, topics, or phrasings might cause your content to vanish from AI summaries altogether. Identifying those early allows you to adjust your phrasing while preserving your ideas.

You’ll future-proof your distribution. As more people use AI to browse the web, testing how your content appears to AI users will become as essential as mobile optimization or SEO once were.

This isn’t about changing your beliefs or censoring yourself. It’s about understanding the ecosystem your content lives in. If the AI layer misrepresents or ignores your work, your message never reaches its audience in full.

The Next Frontier: AI-Optimized Content

We’ve already lived through the age of search-engine-optimized content. Now we’re entering the era of AI-optimized content: writing that communicates not only to humans but also to the AI interpreters that increasingly mediate access to information.

Creators who learn how to navigate this shift early will have a massive advantage. Testing your work through OpenAI’s browser today lets you see where the invisible lines are drawn, and how to stay authentic while ensuring your ideas aren’t filtered into oblivion.
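One rough way to make that kind of test repeatable is to list the key points you expect a faithful summary to carry, paste in the summary the AI produced, and check which points are barely represented. The Python sketch below is only an illustration under stated assumptions: the function names, the stopword list, and the 0.5 threshold are all hypothetical choices, the summary is assumed to be copied by hand from the AI interface, and plain word overlap is a crude stand-in for real meaning comparison.

```python
import re

# Small, hypothetical stopword list; extend it for your own writing.
STOPWORDS = {"a", "an", "the", "and", "or", "of", "to", "in", "is",
             "are", "for", "on", "that", "it", "with", "as", "by", "not"}

def tokens(text):
    """Return the set of lowercase words in text, minus stopwords."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {w for w in words if w not in STOPWORDS}

def coverage(key_point, summary):
    """Fraction of the key point's significant words found in the summary."""
    point_words = tokens(key_point)
    if not point_words:
        return 1.0  # nothing to look for, so treat it as covered
    return len(point_words & tokens(summary)) / len(point_words)

def missing_key_points(key_points, summary, threshold=0.5):
    """List the key points whose word coverage falls below the threshold."""
    return [p for p in key_points if coverage(p, summary) < threshold]

if __name__ == "__main__":
    # Example inputs: your own key points, and a summary pasted in by hand.
    key_points = [
        "AI summaries can omit controversial sections",
        "traffic depends on AI interpretation",
    ]
    summary = ("The article argues that traffic increasingly depends on "
               "how AI interpretation handles a page.")
    for point in missing_key_points(key_points, summary):
        print("Possibly omitted:", point)
```

A flagged point is a prompt to reread the summary yourself, not proof of filtering: a summary can paraphrase an idea with entirely different words, and it can repeat your words while still changing your meaning.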

OpenAI’s browser censorship isn’t necessarily malicious. It’s the byproduct of trying to make AI “safe” for everyone. But the result is a quiet gatekeeping system — one that can alter what people see, think, and believe without them realizing it.

If you’re a writer, journalist, or creator, the solution isn’t to rage against the system — it’s to understand it.

Start reading your own articles through OpenAI’s browser. See what’s missing. See what’s changed.

Because in the future of AI-mediated content, the only way to stay visible is to understand how you’re being filtered.